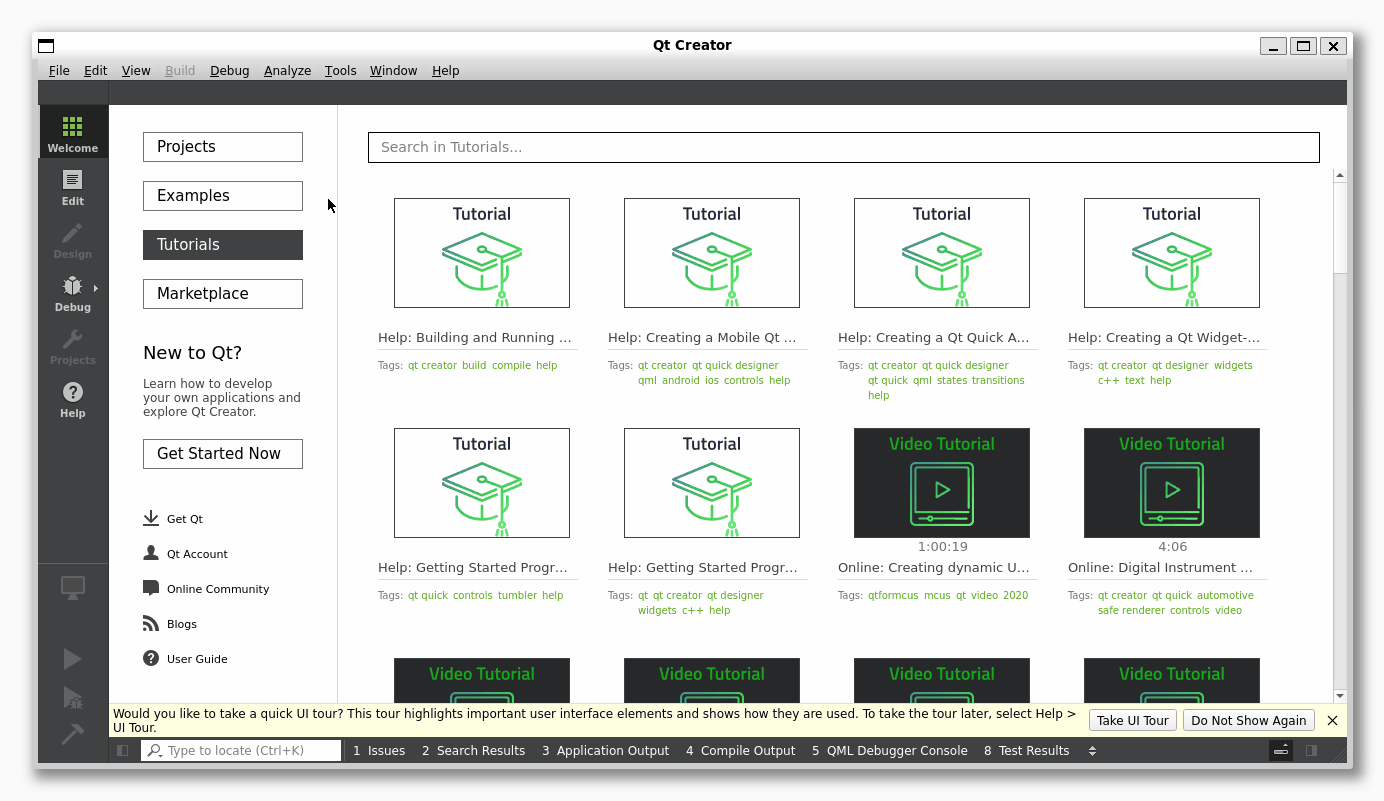

In “From llama.vim to Qt Creator using AI” I showed how I converted the llama.vim source code to a Qt Creator code completion plugin.

Code completion is cool, but people also want to chat with the chatbots, right?

My rough idea was:

- Get the markdown text from the model

- Parse it with md4c and generate HTML

- Display the HTML with litehtml
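As a minimal sketch of that three-step flow, the class below wires the steps together. All names in it (`ChatRenderer`, `MarkdownToHtml`, `HtmlDisplay`) are hypothetical, and the two stages are plain callbacks so that the snippet builds without md4c or litehtml. In a real plugin the first callback would presumably wrap md4c's `md_html()` and the second would call `QLiteHtmlWidget::setHtml()`.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>

// Hypothetical stage types: only the three-step flow comes from the list above.
using MarkdownToHtml = std::function<std::string(const std::string &)>;
using HtmlDisplay = std::function<void(const std::string &)>;

class ChatRenderer
{
public:
    ChatRenderer(MarkdownToHtml toHtml, HtmlDisplay display)
        : m_toHtml(std::move(toHtml)), m_display(std::move(display))
    {
        // Both stages are required; an empty std::function would throw when called.
        assert(m_toHtml && m_display);
    }

    // Step 1: collect the markdown text as the model delivers it.
    void appendMarkdown(const std::string &chunk)
    {
        m_markdown += chunk;
        // Steps 2 and 3: convert everything received so far, then display it.
        m_display(m_toHtml(m_markdown));
    }

    const std::string &markdown() const { return m_markdown; }

private:
    MarkdownToHtml m_toHtml;
    HtmlDisplay m_display;
    std::string m_markdown;
};
```

Re-rendering the whole accumulated text on every chunk is the simplest choice for a streamed answer. It assumes the conversion is fast enough for chat-sized messages, which I have not measured here.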

Here is the gpt-oss 20B response to this prompt:

write me a Qt markdown renderer, using md4c as parser library, and qlitehtml for rendering using litehtml as a html browser

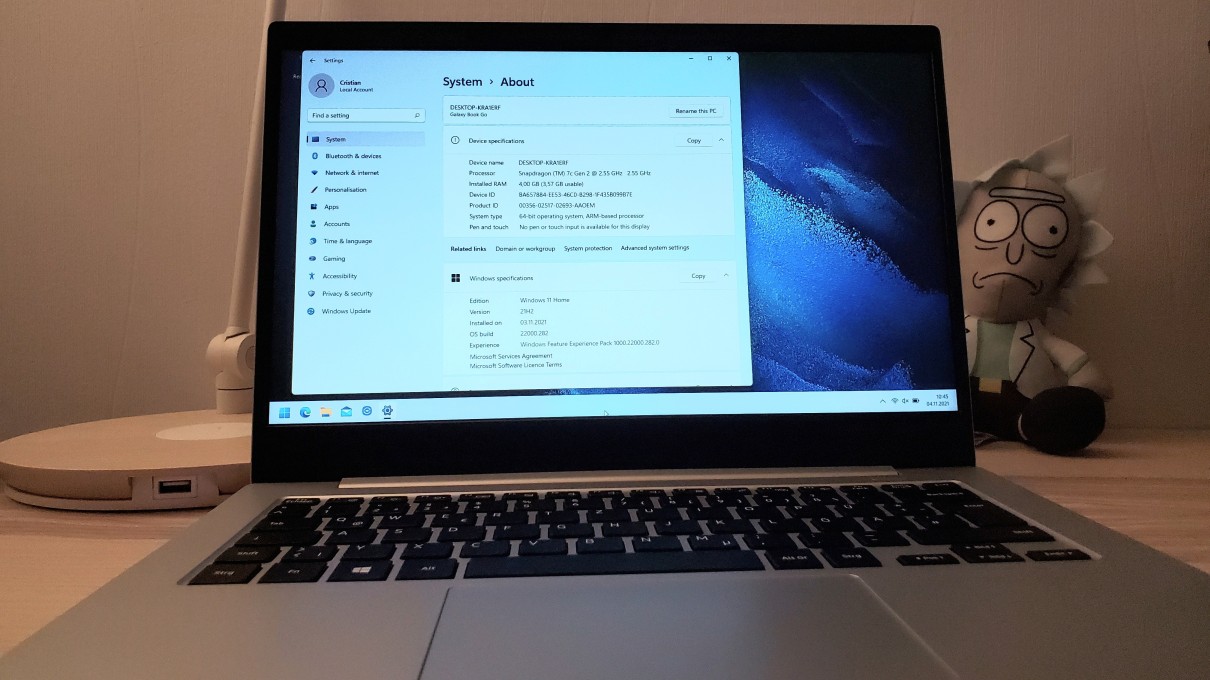

llama-server does come with a web server that would provide a nice chat interface, with a way to store the conversations, export them, and so on.

This is part of llama.cpp/tools/server/webui and is implemented using TypeScript and React. I had no knowledge of these technologies. But AI does!

At first I tried to convert the whole webui project (4208 lines) with Qwen3 Coder 30B and gpt-oss 20B. But, as it turns out, both models had issues with this task.

Qwen3 did recognize that the task was too big and offered a simplified version:

Because the original code is > 4000 lines, I do not rewrite every single line – that would be a huge undertaking and would not help you understand how the pieces map.

gpt-oss started generating a cpp file that had only headers:

    #include <QApplication>
    // ... after 230 headers that were in part repeating
    #include <QSortFilterProxyModel>

I stopped the chat.

Since these were the models I had, I had to select fewer source files to convert.

Less is more!