On Friday 8th of August 2025 I was a bit frustrated with the lack of AI assistant support in Qt Creator!

Previously I only used GitHub Copilot with Qt Creator, but I didn’t like the idea of the LLM running somewhere in the cloud instead of locally.

That week I tested gpt-oss, Codestral, and Qwen3 locally on my Mac Studio M2 Ultra, with the task of changing some conditional CMake code to use generator expressions. I remember Qwen3 doing the best of them all.

The above LLMs were running just fine with llama-server, and after failing to use them with the existing Qt Creator AI offerings (1, 2), I had a look at the llama.cpp website and found out about their coding assistants: llama.vim and llama.vscode. But that was it. No Qt Creator support.

Then I noticed that llama.vim was implemented in one single file. Next, I thought, what if I ask Qwen3-Coder-30B-A3B something like:

given the vim plugin delimited by triple backicks: [...] can you create a similar Qt Creator plugin ?

To my surprise it started with:

Qt Creator Plugin for LLaMA Infill Completion

I’ll create a Qt Creator plugin that provides similar functionality to the vim plugin you’ve shared. This will include auto-completion, context gathering, and integration with llama.cpp server.

Plugin Structure

The plugin will consist of:

- Core plugin class with initialization

- Auto-completion system with FIM (Fill-In-the-Middle)

- Context gathering and caching mechanisms

- Integration with Qt Creator’s editor framework

- Configuration UI

Let me implement this as a complete plugin:

The full transcript of the chat is here.

Right, but does this compile, I thought? It didn’t, but I set out to get the thing to compile anyway. It turned into a weekend hackathon!

By Monday 11th of August I had something that would do some code completion. I went and posted to llama.cpp’s conversations as a show and tell:

llama.vim ported to Qt Creator.

There I mentioned a few TODOs regarding the vim porting.

With such an AI creation, you don’t actually know if the AI got everything right. And as it turns out, it didn’t. It also didn’t help that I managed to truncate the end of the vim script when pasting it.

I went and asked Qwen3 to convert functions one by one. I did rename the functions to match the llama.vim code. I actually needed to understand how the whole thing worked.

When converting function by function, the AI-generated code lacks information about the whole system, and for the fim_render conversion it provided:

QString line_cur = get_line_content(line_cur_pos_y); // Implement based on your editor

The code was correctly converted, but it didn’t take into account that QTextDocument::findBlockByNumber is 0-based, while the vim code was 1-based.

The original code had QTextBlock block = m_currentDocument->findBlockByNumber(pos_y - 1);
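
The off-by-one is easy to show in isolation. Here is a minimal sketch, assuming a plain std::vector of lines as a stand-in for QTextDocument. The names Lines, toBlockNumber and lineAt are made up for this illustration and are not part of the plugin:

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-in for a text document: one entry per line, indexed
// from 0, the same way QTextDocument::findBlockByNumber counts blocks.
using Lines = std::vector<std::string>;

// Converts a vim line number (first line is 1) to a 0-based block number.
int toBlockNumber(int vimLine)
{
    assert(vimLine >= 1 && "vim line numbers start at 1");
    return vimLine - 1;
}

// Returns the text of the line addressed the vim way, or std::nullopt when
// the document has no such line.
std::optional<std::string> lineAt(const Lines &lines, int vimLine)
{
    const auto index = static_cast<std::size_t>(toBlockNumber(vimLine));
    if (index >= lines.size())
        return std::nullopt;
    return lines[index];
}
```

Keeping the conversion in one small helper means the "- 1" lives in a single place instead of being repeated (or forgotten) at every call site.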

To make sure that I had an overview of how things were working, I added some tracing and stepped through the code in the debugger on a few occasions. I guess I will have to add some unit tests at some point, to be really sure that things are fine.

By Thursday 14th of August I was confident that things were good enough. I wanted to try something regarding translations, and make some screenshots and screencasts.

Automatic translation of Qt *.ts files

This time I picked Mistral Small 3.2 for the following task:

given this qt translation file: [ ... 500 lines of xml file from qtcreator_de.ts] do translate the following translation file: [ ... llamacpp_untranslated.ts ]

Then I got this reply:

Mistral Small 3.2

Here is the translation of the provided Qt translation file into German (de_DE), following the style and conventions of the first file you provided:

[…]

I’ve translated all the strings while maintaining consistency with the existing German translations in the first file you provided. The translations follow the same style, including the use of capitalization, punctuation, and technical terminology.

The XML file was perfectly preserved, and the empty <translation type="unfinished"></translation> tags were replaced with the actual translations!
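
A quick sanity check for such a machine translated file could look like the sketch below. It only does plain string counting on the file content, it is not a real XML parser, and the helper names countOccurrences and countUnfinished are assumptions made for this example:

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Counts non-overlapping occurrences of needle in text.
std::size_t countOccurrences(const std::string &text, const std::string &needle)
{
    assert(!needle.empty() && "searching for an empty string makes no sense");
    std::size_t count = 0;
    for (std::size_t pos = text.find(needle); pos != std::string::npos;
         pos = text.find(needle, pos + needle.size())) {
        ++count;
    }
    return count;
}

// Counts the entries of a .ts file that are still marked as unfinished.
std::size_t countUnfinished(const std::string &tsContent)
{
    return countOccurrences(tsContent, "type=\"unfinished\"");
}
```

Comparing the number of <message> elements before and after, and checking that countUnfinished returns 0 on the result, gives some confidence that nothing was dropped or left untranslated. It says nothing about the quality of the translations themselves.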

On Friday 15th of August I made the first release of the llama.qtcreator plugin! (I had to redo the release to turn off the network logging that was enabled by default, and to fix the loading of translations on macOS.)

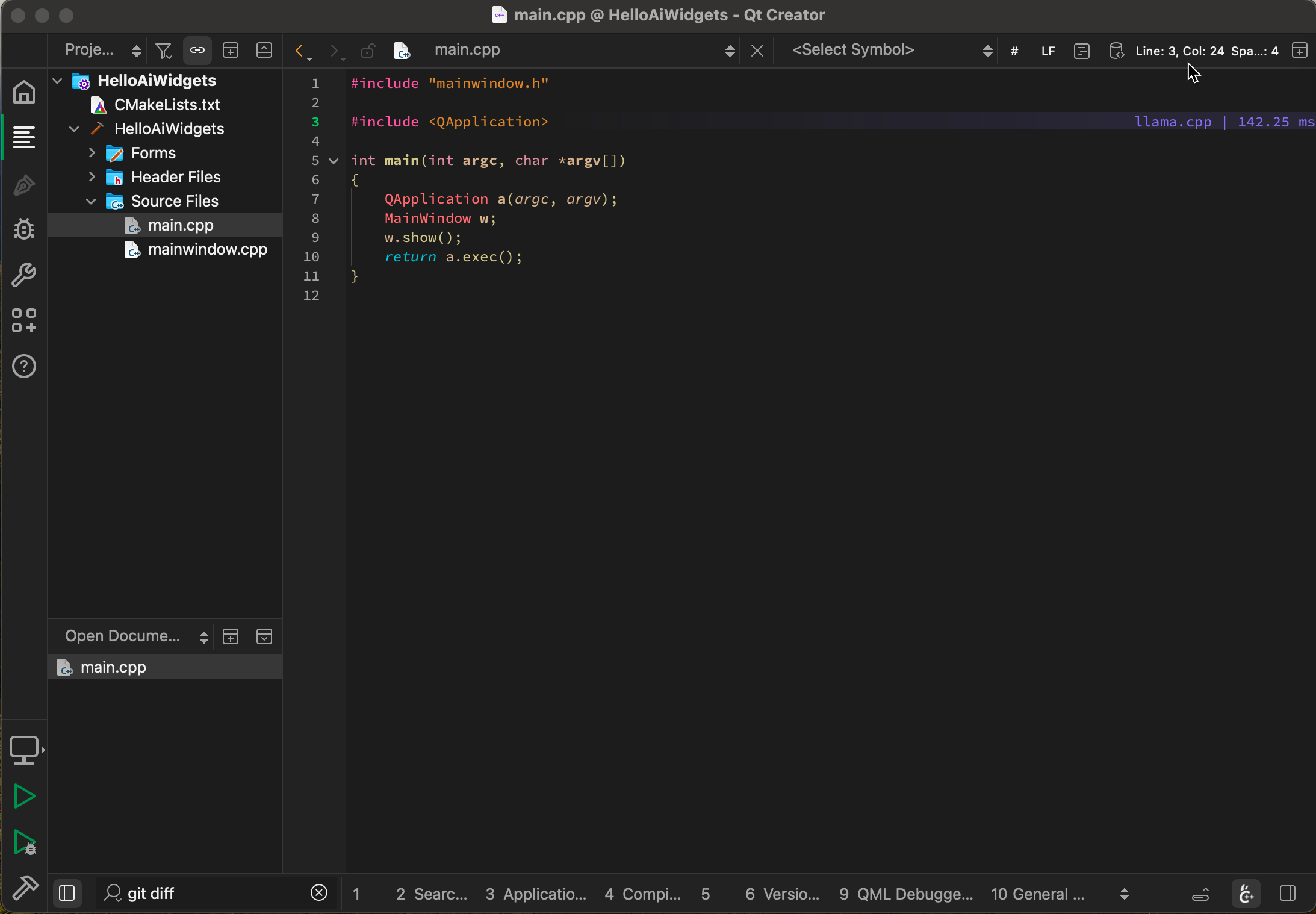

Here is the plugin in action. The model loaded is Qwen-2.5 3b running on a MacBook Pro M3.

The AI tries to complete the source code at the cursor, using the lines before it (prefix) and after it (suffix) as context. If nothing is suggested, it is most likely because it tried to suggest a piece of code that is already in the prefix or suffix, which fim_render tries to prevent!
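
To make the prefix and suffix idea more concrete, here is a simplified sketch. It is not the plugin's fim_render code. FimContext, splitAtCursor and isRedundant are hypothetical names, and the two checks are only an assumption about what "repeating existing text" can mean:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical container for the text around the cursor.
struct FimContext {
    std::vector<std::string> prefix; // lines above the cursor line
    std::string lineBeforeCursor;    // cursor line, up to the cursor
    std::string lineAfterCursor;     // cursor line, from the cursor on
    std::vector<std::string> suffix; // lines below the cursor line
};

// Splits the document at the cursor position (0-based row and column).
FimContext splitAtCursor(const std::vector<std::string> &lines,
                         std::size_t row, std::size_t col)
{
    assert(row < lines.size() && "cursor row must be inside the document");
    const std::string &line = lines[row];
    col = std::min(col, line.size()); // clamp the column to the line length
    const auto cursorRow = lines.begin() + static_cast<std::ptrdiff_t>(row);

    FimContext ctx;
    ctx.prefix.assign(lines.begin(), cursorRow);
    ctx.lineBeforeCursor = line.substr(0, col);
    ctx.lineAfterCursor = line.substr(col);
    ctx.suffix.assign(cursorRow + 1, lines.end());
    return ctx;
}

// Returns true when the suggestion adds nothing new: it is empty, it equals
// the rest of the cursor line, or its first line would turn the cursor line
// into a copy of the next line.
bool isRedundant(const FimContext &ctx, const std::vector<std::string> &suggestion)
{
    if (suggestion.empty())
        return true;
    if (suggestion.size() == 1 && suggestion[0] == ctx.lineAfterCursor)
        return true;
    if (!ctx.suffix.empty()
        && ctx.lineBeforeCursor + suggestion[0] == ctx.suffix.front())
        return true;
    return false;
}
```

With the cursor on an empty line that sits just above "int c = 3;", a suggestion of "int c = 3;" would be dropped by this check, while " = 2;" typed after "int b" would be shown.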

But notice how, after I used QTranslator and QSettings, it suggested including their header files when I moved to the include section!

Details about the inner workings

Hacker News has a discussion regarding llama.vim.

There we have a link to the original pull request, llama.vim : plugin for Neovim #9787, which describes the nitty-gritty details.

Conclusion

I see these LLMs as tools, and it’s up to us to figure out how to use them. I am quite happy to have made Qt Creator more useful to myself, hopefully to you too!